The landscape of Android screenshot testing in 2023

A recap on screenshot testing in the Android ecosystem

As I described in my previous article, screenshot testing belongs to the context of non-functional tests, i.e., verifications that don’t target the critical value we should provide to final users.

Screenshot tests target the static state of a View Hierarchy, which is eventually constrained to an existing Design System component or an entire screen that our users interact with. They deliver value when they catch visual bugs in automated verifications executed during the SDLC in a shift-left fashion (e.g., fulfilling CI checks).

The practice of screenshot testing in the Android ecosystem has a curious history. Facebook was the first player to introduce a comprehensive open-source solution for screenshot tests for Android back in 2015. Shopify also presented Testify in 2016, the same year former Karumi folks shared Shot with the broad community.

All the solutions above have one thing in common: a direct dependency on the Android/Instrumentation as the environment to drive such tests. As a practical implication, this means having a physical device or an Android emulator available during the test execution, which might be challenging even in 2023.

That was the status quo until 2019, when Square/CashApp Engineers presented Paparazzi and challenged the hard dependency on Android/Instrumentation from previous solutions by using an innovative approach that allows screenshot tests to execute as plain-old JVM unit tests. Paparazzi has matured since then and has been adopted by prominent players in the industry.

More recently, another kid appeared on the block: Dropbox folks shared Dropshots with the world in 2022, a tool that still relies on Android/Instrumentation to drive screenshot tests but brings an innovative take compared with the early days of community-driven solutions.

Several parts of this history derive directly from the fact that Google never offered official support for this particular use case inside androidx.test. Despite that, any tool brings trade-offs when adopted in a project, especially in huge ones, and all solutions mentioned earlier are no exception to this rule of thumb.

Defining the contenders and the comparison criteria

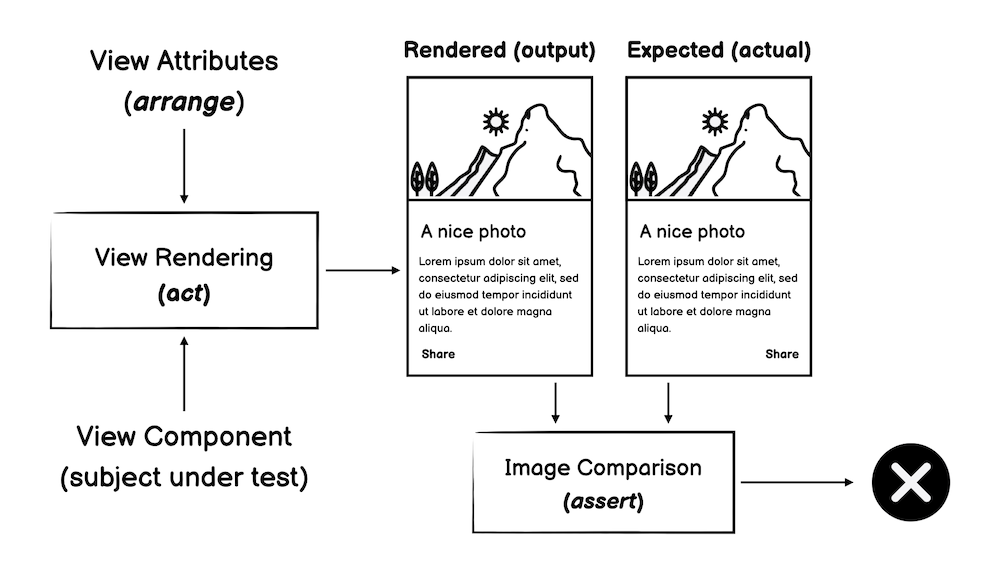

As highlighted in my previous article, a well-designed screenshot test always verifies a View Hierarchy in isolation, treating it as the subject under test.

This subject is arranged with specific attributes and rendered during the test execution; the rendered View is captured and compared against the expected output, usually on top of tooling that performs pixel-by-pixel image comparison.

There are several dimensions of comparison we can evaluate when reviewing available libraries that enable screenshot testing for Android, for example:

- How an Engineer can get screenshot tests working in an Android project

- Everything related to the execution of tests, which should ideally be as fast as possible

- The capability of testing against different scenarios for the same screen state, e.g., night/light mode

- The screenshot accuracy considering the final user’s perspective

- And many more!

With that being said, in this article, we’ll explore three available libraries that enable screenshot testing for Android (Shot, Paparazzi, and Dropshots) using four different criteria:

- The required setup for a build system and test system

- Test execution and test environments

- Rendering Views and capturing screenshots

- Expected outcomes and image comparison strategies

We’ll evaluate a few implementation details they embrace and examine the pros and cons of each from the project’s integration perspective. Ultimately, the analysis should work as a starting point for Android Engineers who want to adopt screenshot testing in a project.

Setup for the build system and test systems

The first criterion we’ll analyze relates to how easily we can start with screenshot testing, i.e., how much effort we need to integrate the target library, both from a build system perspective and a test tooling perspective.

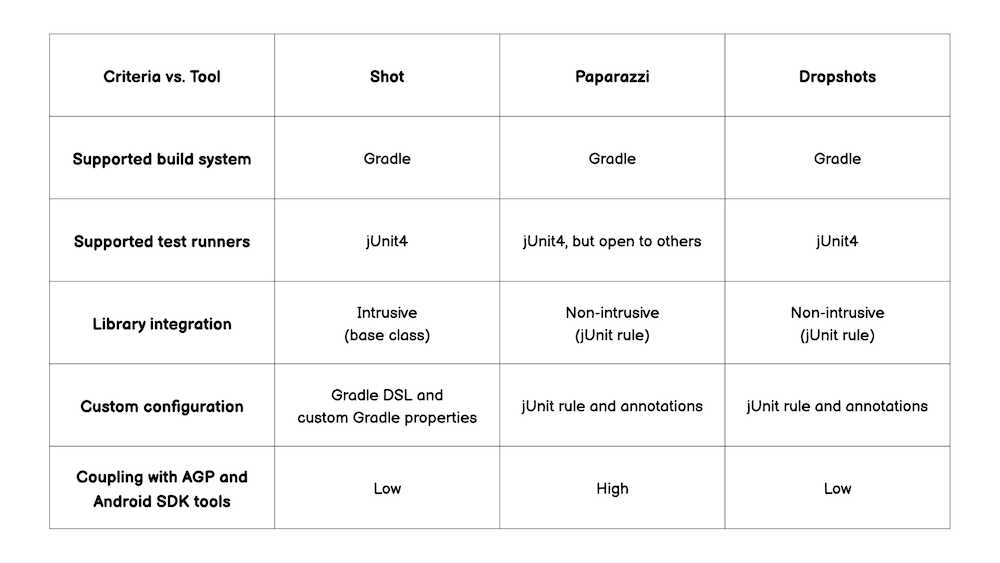

The table below shows my evaluation of the following questions:

- Which build systems are supported (or not)?

- How coupled is the solution with existing Android tooling?

- Which test runners are supported (or not)? Which mechanisms to integrate that library do we have, and how easy is it to customize library behavior?

As you can see, Shot, Paparazzi, and Dropshots are somewhat similar since they only support Gradle as a build system and integrate with it using companion Gradle plugins. In addition, they all support only jUnit4 as the Test Runner.

In the case of Paparazzi, it could work with other Test Runners since test execution happens over JVM/Hotspot rather than Android/Instrumentation. There have been proposals already, but Square/CashApp folks prefer to support only jUnit4, which is the right take in my opinion.

Of the three options, I see Shot as the one with the most annoying setup. You need to inject test configuration with a Gradle DSL, and it requires a custom configuration to differentiate between test code living under Android library modules and Android application modules. It also forces you to inherit from a base class to interact with the library, something I find more intrusive and less composable than plain-old jUnit rules.

However, unlike Paparazzi, Shot and Dropshots are not strongly coupled with the Android Gradle Plugin and its underlying Android SDK. In my experience, Paparazzi is super sensitive to moving parts contrived by the official Android tooling.

Such long-distance fragility relates to Paparazzi’s design choices (more on that later). I failed to get Paparazzi fully working on a large Android project with a non-trivial setup I work on. In addition, I saw it break with AGP updates a few times in some of my pet projects, effectively blocking the adoption of the latest version of AGP. Hence, one should keep that in mind, since there is a major version of AGP coming this year.

Test execution and testing environments

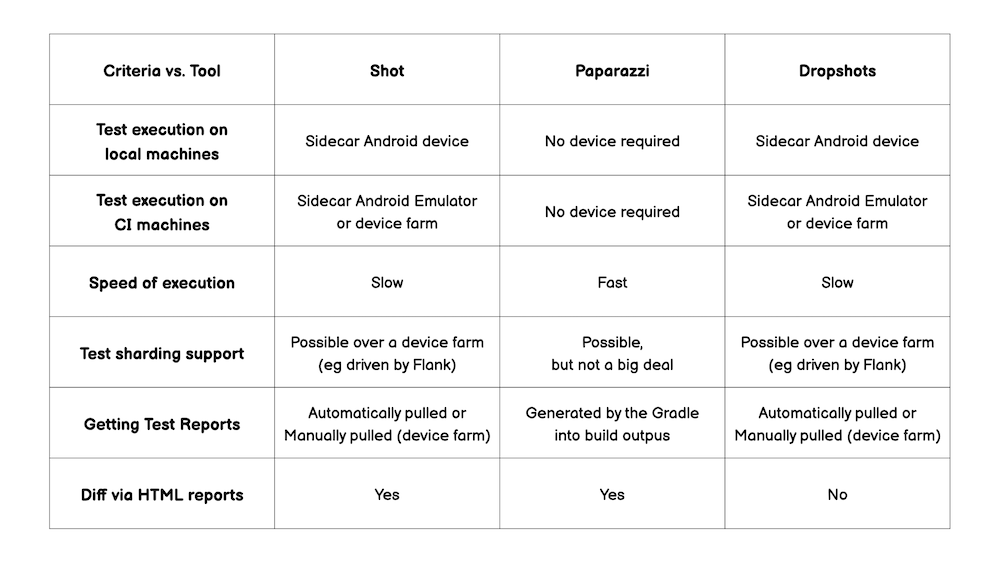

The following criteria we’ll analyze relate to testing executions and testing environments. The table below shows my evaluation of the following questions:

- What are the requirements to run tests on local machines? Are such conditions the same for CI machines?

- How fast do such tests execute? What would be the impact of having hundreds or thousands of screenshot tests in a test suite? Can we run tests in parallel with test sharding?

- How do we retrieve test reports?

As we can see, Shot and Dropshots are identical for all considered points: both require a sidecar Android device when a Gradle task drives the execution, and both support skipping the invocation of the Android/Instrumentation process, which allows off-loading the test execution to a device farm like Firebase TestLab (a solid option for CI).

Considering test executions in the local machine - the typical scenario for Engineers during SDLC - both Shot and Dropshots deliver slower feedback than Paparazzi. Paparazzi’s design enables screenshot testing over JVM/Hotspot, which translates into faster executions for several reasons: there is no need to build test APKs wrapping the test code, no ADB invocations, no intensive data transfer via USB or Wi-Fi, no Instrumentation process instantiation, etc.

All the libraries allow easy retrieval of jUnit/Jacoco reports when running tests through Gradle as part of build outputs. However, manual pulling of artifacts usually applies if a device farm is the environment instrumenting test code driven by Shot or Dropshots.

In addition, Test Sharding over Firebase TestLab is a feasible option for these two tools, on top of tools like Flank, which may significantly speed up execution when running screenshot tests in CI environments.
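To illustrate the general idea behind test sharding, here is a minimal sketch in plain Java that splits a test suite deterministically across shards by hashing each test name. It is only an illustration under stated assumptions: `ShardAssigner`, `shardFor`, and `testsForShard` are hypothetical names, and the hash-modulo strategy is a simplification that does not reproduce how Flank or Firebase TestLab actually distribute tests.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: assigns every test to exactly one shard, deterministically.
final class ShardAssigner {

    private ShardAssigner() {}

    // floorMod keeps the result in [0, shardCount) even for negative hash codes.
    static int shardFor(String testName, int shardCount) {
        return Math.floorMod(testName.hashCode(), shardCount);
    }

    // Returns the subset of tests that the shard with the given index should run.
    static List<String> testsForShard(List<String> allTests, int shardIndex, int shardCount) {
        List<String> selected = new ArrayList<>();
        for (String testName : allTests) {
            if (shardFor(testName, shardCount) == shardIndex) {
                selected.add(testName);
            }
        }
        return selected;
    }
}
```

Since the assignment depends only on the test name and the number of shards, each device can compute its own subset independently, and no test runs twice. A hash-based split does not balance shards by execution time, which is one reason dedicated tooling may use smarter strategies.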

Rendering Views and capturing screenshots

The next criterion in our analysis relates to how the tooling drives the “act” part of a screenshot test, i.e., how the target View hierarchy gets rendered and captured into an image for further evaluation.

This topic is vital since it has practical implications for running tests at scale, especially in an everyday context where Engineers develop using Apple hardware (nowadays, mostly Apple Silicon) and validate screenshots on Linux boxes running on top of x86 hardware in Continuous Integration.

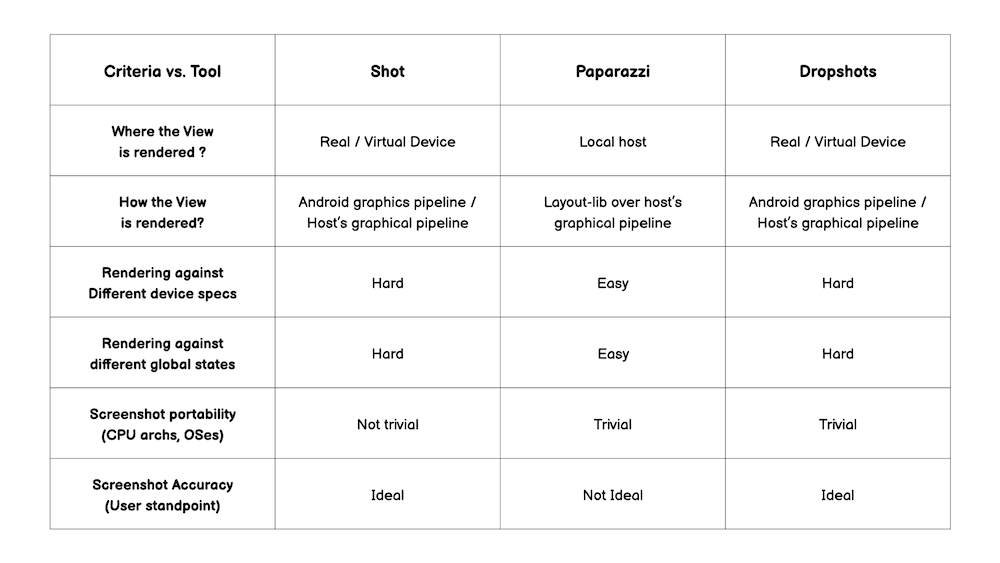

The table below shows my evaluation of the following questions:

- Where is the subject under test rendered?

- How is the target View hierarchy rendered?

- How easy is rendering against different device specifications (e.g., varying device dimensions) and further device states (e.g., dark/light mode, portrait/landscape orientation, etc.)?

As we can see, Shot and Dropshots render View hierarchies using the actual Android graphics pipeline. However, the rendering process might produce different outcomes if the pipeline executes in the Android Emulator rather than a physical device, since the usual configuration for Android Emulators opts for hardware acceleration, meaning that part of the rendering happens on the host machine’s hardware.

This fact profoundly impacts how portable the captured screenshots are between different CPU/GPU hardware, given that Android Emulators are the most trivial option to use fixed device specifications (width, height, screen density) when testing. This issue in the Shot repository offers more insights into tackling this problem. Yet, Dropshots improves on this issue by generating and comparing screenshots in the same place. More on that later.

In addition, changing the device state at testing time is not trivial when leveraging physical or emulated devices. Furthermore, targeting other device specs means testing against another device, which is not always easy to come by on local workstations or CI machines.

On the other hand, Paparazzi takes another approach, rendering Views on top of layout-lib, the same component used to preview layouts on Android Studio. The rendering process happens on top of the classical java.awt package, running over JVM/Hotspot rather than Android/Instrumentation. Thus, it conveys more predictable outcomes regardless of the underlying hardware.

On top of that, this design decision allows Paparazzi to have screenshot tests running over jUnit5 or other Test Runners eventually, as mentioned previously. It also means we can easily define device modes or even parametrize device specs at testing time. However, the fact that Paparazzi effectively replaces the entire Android graphics pipeline with a fake one has consequences, as we’ll see.

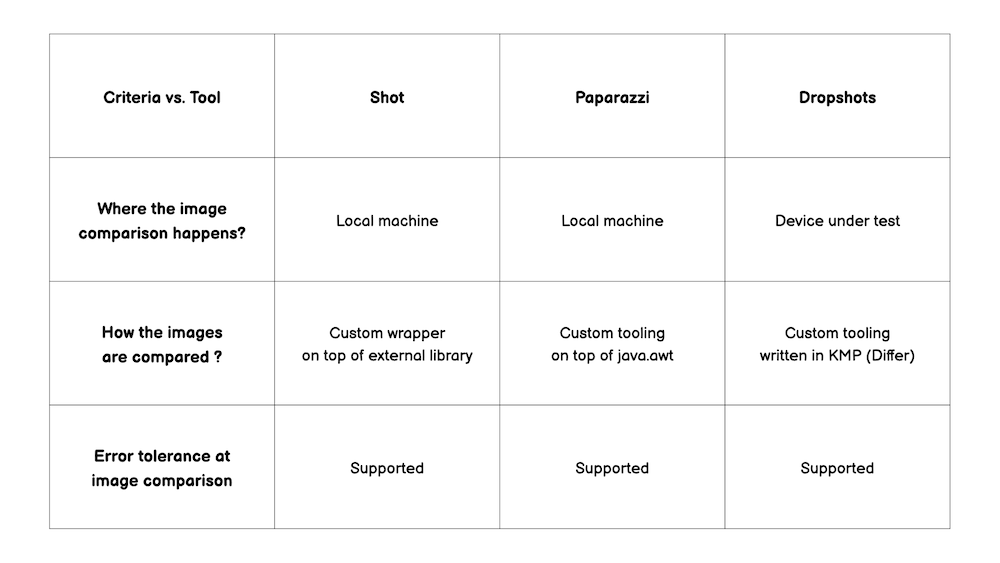

Expected outcomes and image comparison strategies

The final criterion in our analysis relates to how the tooling drives the “assert” part of a screenshot test, i.e., how the captured rendering is evaluated against the expected outcome.

The table below shows my evaluation of the following questions:

- Where does the image comparison happen?

- How are the screenshots compared?

- How accurate are the generated images from the final user’s perspective? Is there any support for injecting error tolerance when comparing images?

As we can see, these comparison criteria highlight some differences between Shot, Paparazzi, and Dropshots libraries. Even though all libraries support a tolerance factor when comparing the captured screenshot against the expected outcome, all libraries also employ different mechanisms to perform pixel-by-pixel matching, either by implementing a custom image comparator or wrapping an existing one.
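To make the idea of a tolerance factor more concrete, here is a minimal sketch of pixel-by-pixel matching in plain Java on top of `java.awt.image.BufferedImage`. It does not reproduce the comparator shipped by Shot, Paparazzi, or Dropshots: `ScreenshotComparator`, `differingPixelRatio`, and `matches` are hypothetical names, and the tolerance is assumed here to be the maximum accepted fraction of differing pixels.

```java
import java.awt.image.BufferedImage;

// Hypothetical comparator: counts pixels whose ARGB value differs between two images.
final class ScreenshotComparator {

    private ScreenshotComparator() {}

    // Returns the fraction (0.0 to 1.0) of pixels that differ between both images.
    static double differingPixelRatio(BufferedImage expected, BufferedImage actual) {
        if (expected.getWidth() != actual.getWidth()
                || expected.getHeight() != actual.getHeight()) {
            return 1.0; // Assumption: images with different dimensions never match.
        }
        long differing = 0;
        for (int y = 0; y < expected.getHeight(); y++) {
            for (int x = 0; x < expected.getWidth(); x++) {
                if (expected.getRGB(x, y) != actual.getRGB(x, y)) {
                    differing++;
                }
            }
        }
        long total = (long) expected.getWidth() * expected.getHeight();
        return (double) differing / total;
    }

    // The screenshot passes when the ratio of differing pixels stays within the tolerance.
    static boolean matches(BufferedImage expected, BufferedImage actual, double tolerance) {
        return differingPixelRatio(expected, actual) <= tolerance;
    }
}
```

With a tolerance of `0.0`, any single differing pixel fails the check. A small positive tolerance accepts minor rendering noise, at the cost of potentially hiding subtle regressions.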

In addition, the place where such processing happens differs, with a clear shout-out to Dropshots, which adopts a unique approach and pushes the comparison of screenshots to the Android device itself, a different take compared to Shot, which also depends on Android/Instrumentation. Which problems do Dropbox Engineers want to solve with this design decision?

There are at least two of them. Firstly, when reviewing the required configuration for Shot, we can spot an implementation detail leaking when using Shot with ActivityScenario, as per the explanation in Shot’s documentation. The same issue does not exist with Dropshots since the process that drives the test runs the image comparison on top of Differ.

Secondly, the approach used by Dropshots bundles screenshots into the instrumentation APK via its Gradle plugin. Therefore, while Shot effectively moves meaningful chunks of data over ADB twice (once for pushing the tests to the device, another for pulling generated screenshots from the device), Dropshots does that only once. Such an outcome eventually speeds up the overall test execution process.

After having a quick chat with Ryan Harter, one of Dropshots’ authors, I learned about a few extra goodies delivered by Differ, the Kotlin Multiplatform library used to drive the image comparison. Differ implements a technique to fight false positives derived from scenarios like font anti-aliasing interfering in the screenshot comparison, along with the traditional pixel-by-pixel and color evaluations. Such an extra check helps to make images sourced from Android Emulators more portable between different hardware.

The non-obvious trade-off here is that the instrumentation APK might grow by several megabytes according to the number of screenshots to bundle, which eventually requires checking the amount of internal storage available in the target device beforehand.

Final Remarks

At this point, it should be clear that there is no obvious winner when evaluating available libraries for screenshot testing in Android.

Paparazzi seems to be the option that gets most things right. Still, Square/CashApp folks say that it is

“an Android library to render your application screens without a physical device or emulator”

not a screenshot testing library per se.

The wording is not accidental, in my opinion. This means Paparazzi’s authors know that using it as a screenshot testing solution means trusting the accuracy of Android Studio previews to drive a quality check, which is debatable given the value proposition of screenshot tests.

I see Dropshots as a significant improvement for screenshot testing on top of Android/Instrumentation compared to Shot, with a friendly setup, out-of-the-box ActivityScenario support, and expectedly faster executions. However, it lacks some features like side-by-side diffs in HTML reports.

That said, it is worth questioning whether an effective screenshot testing strategy for Android requires sticking with only one particular tool and its trade-offs. As Ryan shared with me, a dual approach combining Paparazzi for fast Developer feedback and Dropshots for solid visual regression catching is not only doable but eventually desirable.

To conclude, although screenshot testing in Android is still an open problem given the current status quo, it has started to capture Google’s attention, as per the latest developments in Robolectric and the new native graphics API.

There are already experiments using this new capability to drive screenshot testing like Paparazzi does, without the onerous requirement of an Android device at testing time. Maybe this new capability will bring the solution we need: fast, reliable, portable, parameterizable visual comparisons for snapshots of our Views. 🔥

If you have made it this far, I’d like to thank you for reading! I also share a few links you might be interested in:

- Sergio Sastre’s talk on Android screenshot testing from Droidcon London, 2021

- John Rodriguez’s talk on Paparazzi from Droidcon New York, 2022

- Blog posts from Sergio Sastre on screenshot testing

- Screenshot Testing our Design System on Android, from Gojek team

- How Screenshot Tests Elevate our Android Testing Strategy from GetYourGuide team

- Snapshot Testing in Android app using Shot library from Sampingan team

- Why go with Paparazzi? Our journey with Android Screenshot Testing from JobAndTalent team

Acknowledgments

I’d like to thank Ryan Harter for proofreading this article and providing valuable feedback.